A Realtime Robotic Inventory System for Intelligent Planograms in Retail

INTRODUCTION

Techniques from Artificial Intelligence (AI) and Machine Learning (ML) are increasingly used in the retail sector and have already proven to generate a substantial return on investment. Large retailers in particular use these methods to predict consumer spending, control their investments, and increase returns. However, most current AI approaches base their predictions and recommendations only on purchase and sales data. Such approaches neglect the critical importance of the physical location of products, the geometric layout and organization of the store, and the typical movement patterns of customers. One way to capture this information is a planogram: a visual depiction that shows the placement of every product in the store. Planograms can be very expensive and time-consuming to create. Most importantly, however, they are notoriously hard to keep up to date, since every update requires human workers to take inventory of the store. In this project, we propose a robotic real-time inventory system that can generate such planograms.

VIDEOS OF OUR ROBOT

The following videos show our real-world robot in action, along with a simulation environment that we created for rapid development and testing. Our robot was featured nationally on CBS News and in 196 local CBS affiliates from San Diego to New York. It was also demonstrated live at the 2019 Southwest Robotics Symposium.

PROJECT RESULTS

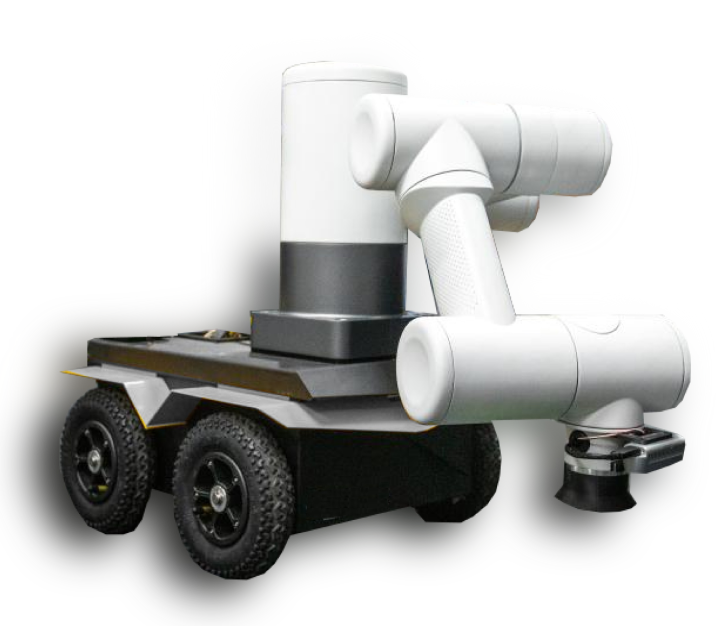

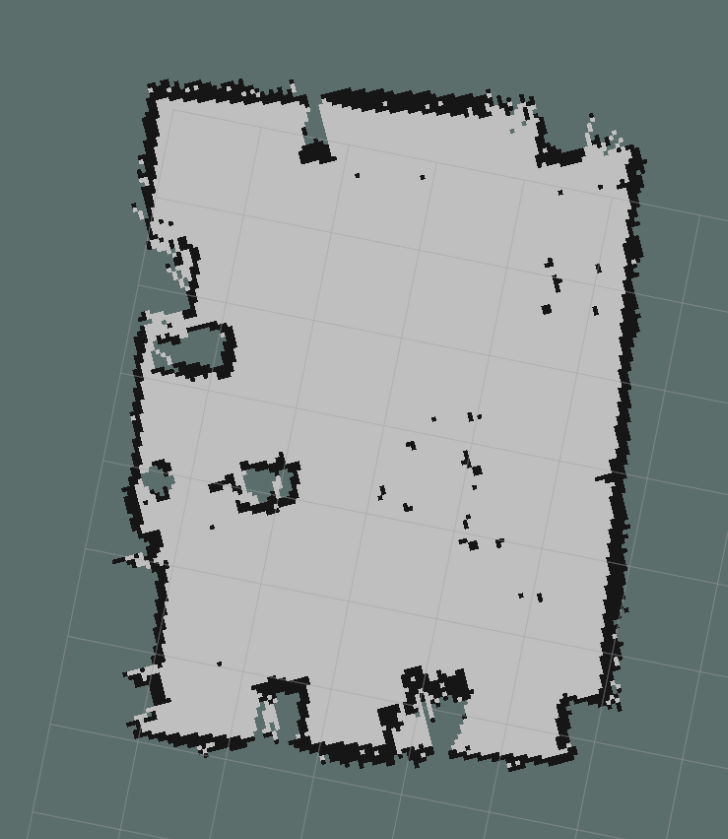

In this project, we developed an automated inventory system that builds a realtime 3D map of the store and can navigate a mobile robot to different locations in the store. The result is a realtime planogram that can be used to answer important questions about product placement. Our system consists of a mobile robot as the front end and a workstation as the backend. The most critical components are AI-based analysis algorithms that fuse the recorded data on the Intel-powered backend. The software stack developed in this project will be released to the public as open source and can be found in the GitHub repositories listed below.
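The text does not say how the recorded data are fused into the planogram. As an assumption for illustration only, the sketch below treats each product detection (for example an RFID read or a camera hit) as a hypothetical `Observation` carrying a product ID, a 3D position in the store map, and a timestamp, and fuses repeated detections of the same product into a running-mean position. The names `Observation`, `Planogram`, `locate`, and `stale` are hypothetical and not part of the project's released stack.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Observation:
    """Hypothetical single detection of a product by the robot."""
    product_id: str
    position: Vec3    # (x, y, z) in the store map frame, e.g. meters
    timestamp: float  # seconds


@dataclass
class PlanogramEntry:
    position: Vec3    # fused (running-mean) position estimate
    count: int        # number of observations fused so far
    last_seen: float  # most recent observation time


class Planogram:
    """Fuses observations into one position estimate per product (sketch)."""

    def __init__(self) -> None:
        self.entries: Dict[str, PlanogramEntry] = {}

    def add(self, obs: Observation) -> None:
        entry = self.entries.get(obs.product_id)
        if entry is None:
            self.entries[obs.product_id] = PlanogramEntry(obs.position, 1, obs.timestamp)
            return
        n = entry.count + 1
        # Incremental mean: new_mean = old_mean + (x - old_mean) / n
        entry.position = tuple(m + (x - m) / n for m, x in zip(entry.position, obs.position))
        entry.count = n
        entry.last_seen = max(entry.last_seen, obs.timestamp)

    def locate(self, product_id: str) -> Optional[Vec3]:
        """Where is this product? None if it has never been observed."""
        entry = self.entries.get(product_id)
        return entry.position if entry is not None else None

    def stale(self, now: float, max_age: float) -> List[str]:
        """Products not seen within max_age seconds (candidates for a rescan)."""
        return sorted(pid for pid, e in self.entries.items() if now - e.last_seen > max_age)


if __name__ == "__main__":
    p = Planogram()
    p.add(Observation("sku-123", (1.0, 2.0, 0.5), 10.0))
    p.add(Observation("sku-123", (1.5, 2.0, 0.75), 20.0))
    print(p.locate("sku-123"))                   # (1.25, 2.0, 0.625)
    print(p.stale(now=100.0, max_age=60.0))      # ['sku-123']
```

A running mean suits repeated, noisy reads of a product that stays put. A product moved to a different shelf would be averaged between its old and new locations, so a practical system would likely reset an entry when a new detection lies far from the current estimate.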

Our control stack is a software layer that provides low-level access to the robot and its attached sensors, cameras, and RFID readers. The software layer will feature routines for controlling the mobile platform, as well as routines for moving the robot arm to arbitrary locations in space. In addition, a framework for behavior-based robot control will be implemented and populated with example primitives and behaviors. Typical behaviors for the retail environment include move-to-point, avoid-obstacle, reach-to-point, and scan-object. Beyond these behaviors, the framework also includes a basic path planner that generates navigation paths from a given map of the environment. Since path planning is executed on the backend, we will devise appropriate communication protocols for transmitting critical information between the robot and the backend. We also aggregate all data provided by the robot, e.g., scanned items, their locations, point clouds, and distance measurements, to produce an annotated 3D map of the environment.
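The text does not name the algorithm behind the basic path planner. As an illustration only, the sketch below assumes the store map is a 2D occupancy grid (`0` = free, `1` = obstacle such as a shelf) and runs A* search with a Manhattan-distance heuristic over 4-connected cells. The function name `plan_path` and the grid encoding are hypothetical; a real store map would more likely use metric coordinates, 8-connectivity, and the robot's footprint.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """A* on a 4-connected occupancy grid; returns start..goal or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates unit-cost 4-connected moves (admissible)
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # outdated heap entry; a cheaper route to this cell was found
        r, c = cell
        for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nb
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nb, float("inf")):
                    g_cost[nb] = ng
                    came_from[nb] = cell
                    heapq.heappush(open_heap, (ng + h(nb), ng, nb))
    return None


if __name__ == "__main__":
    aisle_map = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],  # a shelf blocking the direct route
        [0, 0, 0, 0],
    ]
    # The only route goes across row 0, down column 3, and back along row 2
    print(plan_path(aisle_map, (0, 0), (2, 0)))
```

Running this on the backend fits the division of labor described above: the robot would only need to receive the resulting waypoint list, which keeps the robot-to-backend messages small.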

INVERSE KINEMATICS AND CONTROL
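Reaching a shelf location with the arm (the reach-to-point behavior) requires inverse kinematics: converting a target position into joint angles. The project's arm model and solver are not described here, so the following is only a minimal sketch assuming a hypothetical two-link planar arm with link lengths `l1` and `l2`, solved in closed form with the law of cosines. The function names `two_link_ik` and `two_link_fk` and the example link lengths are illustrative assumptions.

```python
import math
from typing import Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float, elbow: int = 1) -> Tuple[float, float]:
    """Closed-form IK for a hypothetical planar 2-link arm.

    Returns joint angles (q1, q2) in radians that place the end effector at (x, y).
    `elbow` (+1 or -1) selects one of the two mirror-image solutions.
    """
    if elbow not in (1, -1):
        raise ValueError("elbow must be +1 or -1")
    # Law of cosines: the elbow angle follows from the distance to the target
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0 + 1e-9:
        raise ValueError("target is outside the arm's reachable workspace")
    cos_q2 = max(-1.0, min(1.0, cos_q2))  # clamp tiny rounding errors at the workspace edge
    q2 = elbow * math.acos(cos_q2)
    # Shoulder angle: direction to the target minus the offset caused by the bent elbow
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


def two_link_fk(q1: float, q2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics, used here to check an IK solution."""
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


if __name__ == "__main__":
    l1, l2 = 0.4, 0.3    # hypothetical link lengths in meters
    target = (0.5, 0.2)  # hypothetical reach target in the arm's plane
    for elbow in (1, -1):
        q1, q2 = two_link_ik(*target, l1, l2, elbow)
        # Both solutions reproduce the target up to floating-point rounding
        print(elbow, two_link_fk(q1, q2, l1, l2))
```

An arm that reaches arbitrary points in 3D space has more degrees of freedom than this sketch, so a full solver would extend the geometry or switch to a numerical method; the two-link case only illustrates the underlying idea.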

GITHUB REPOSITORIES

SIMULATION ENVIRONMENT

POWERPOINT PRESENTATION

A slide presentation of the project is available as a PDF. The presentation discusses the project's origins and results.

LIVE DEMONSTRATION AT SOUTHWEST ROBOTICS SYMPOSIUM

ACKNOWLEDGMENTS AND FUNDING

Funding for this project is provided by a grant from Intel. We would like to thank our sponsor for their generous support and for the ongoing collaboration with Arizona State University.