Our Mission

The members of the Interactive Robotics Lab at Arizona State University explore the intersection of robotics, artificial intelligence, and human-robot interaction. Our main research focus is the development of machine learning methods that allow humanoid robots to behave intelligently and autonomously. These robot learning techniques span a wide variety of approaches, including supervised learning, reinforcement learning, imitation learning, and interaction learning. They enable robots to gradually expand their repertoire of skills, e.g., grasping objects, manipulation, or walking, without additional effort from a human programmer. We also develop methods that address the question of when and how to engage in collaboration with a human partner. Our methods have been applied to a large number of different robots in the US, Europe, and Japan and have been shown to be particularly successful in enabling intelligent automotive manufacturing. The research group has strong collaborations with leading robotics and machine learning research groups across the globe.

Reinforcement Learning

Programming complex motor skills for humanoid robots can be a time-intensive task, particularly within conventional textual or GUI-driven programming paradigms. To address this drawback, we develop machine learning methods for the automatic acquisition of robot motor skills. Humanoid robots in particular are often intrinsically redundant and can be controlled using far fewer parameters than they have degrees of freedom. Using reinforcement learning, in particular policy search, as a basis, we have introduced various methods for skill acquisition in humanoid robots.

Interaction Learning

To engage in cooperative activities with human partners, robots have to possess basic interactive abilities and skills. However, programming such interactive skills is a challenging task, as each interaction partner can have different timing or an alternative way of executing movements. We proposed learning interaction skills by observing how two humans engage in a similar task. To this end, we introduced new representations such as “Interaction Primitives” and “Correlation-based Interaction Meshes.”

Grasping and Manipulation

Grasping and manipulation of objects are essential motor skills that allow robots to interact with their environment and perform meaningful physical tasks. Robots are still far from human-level manipulation skills, including in-hand or bi-manual manipulation of objects, interactions with non-rigid objects, and multi-object tasks such as stacking and tool use. Our research focuses on applying machine learning and imitation learning techniques to enable precise, goal-oriented robot manipulation.

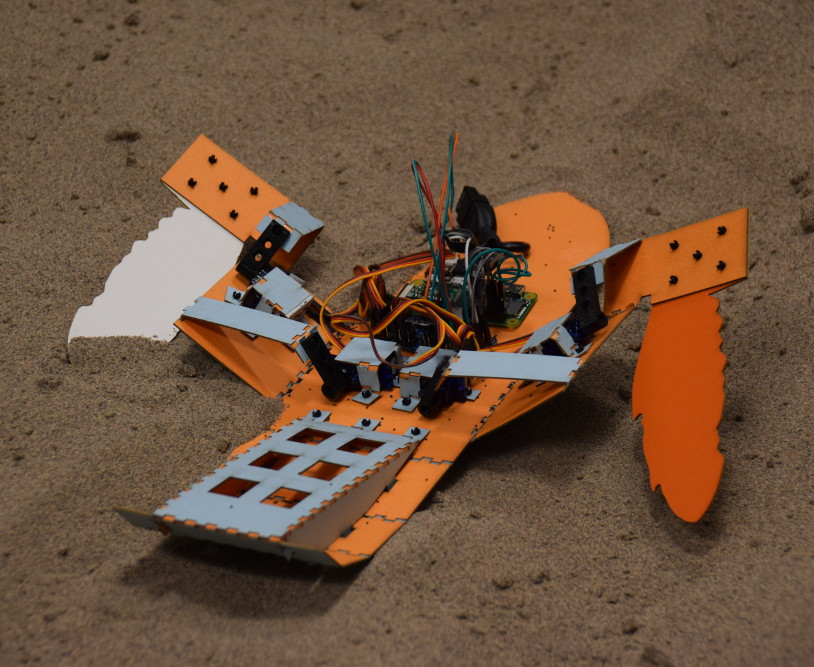

Robot Autonomy

Many new application domains, such as autonomous driving, require robots that operate independently of any human input. Achieving such robot autonomy requires methods for life-long learning and adaptation. We are investigating new methodologies that allow robots to autonomously explore their environment and adapt their goals and objectives.