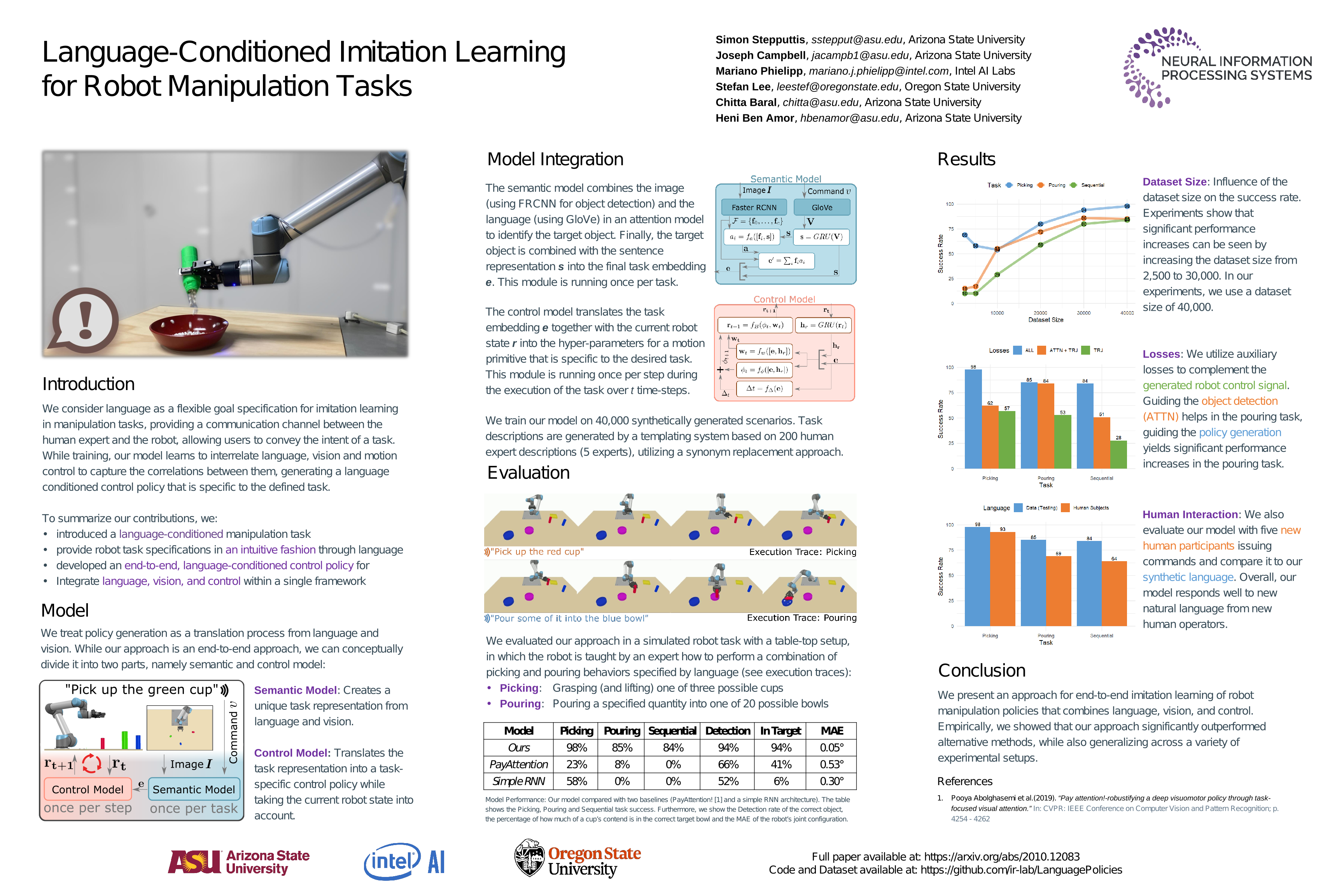

NeurIPS 2020 (Spotlight): Language-Conditioned Imitation Learning for Robot Manipulation Tasks

Simon Stepputtis, Joseph Campbell, Mariano Phielipp, Stefan Lee, Chitta Baral, Heni Ben Amor

Imitation learning is a popular approach for teaching motor skills to robots. However, most approaches focus on extracting policy parameters from execution traces alone, i.e., motion trajectories and perceptual data. No adequate communication channel exists between the human expert and the robot to describe critical aspects of the task, such as the properties of the target object or the intended shape of the motion. Motivated by insights into the human teaching process, we introduce a method for incorporating unstructured natural language into imitation learning. At training time, the expert can provide demonstrations along with verbal descriptions that convey the underlying intent, e.g., “Go to the large green bowl”. The training process then interrelates the different modalities to encode the correlations between language, perception, and motion. The resulting language-conditioned visuomotor policies can be conditioned at run time on new human commands and instructions, which allows for more fine-grained control over the trained policies while also reducing situational ambiguity. We demonstrate in a set of simulation experiments how the introduced approach can learn language-conditioned manipulation policies for a seven-degree-of-freedom robot arm and compare the results to a variety of alternative methods.

Citation

@misc{stepputtis2020languageconditioned,
  title={Language-Conditioned Imitation Learning for Robot Manipulation Tasks},
  author={Simon Stepputtis and Joseph Campbell and Mariano Phielipp and Stefan Lee and Chitta Baral and Heni Ben Amor},
  year={2020},
  eprint={2010.12083},
  archivePrefix={arXiv},
  primaryClass={cs.RO}
}

Introduction Video

Acknowledgments

This work was supported by a grant from the Interplanetary Initiative at Arizona State University. We would like to thank Lindy Elkins-Tanton, Katsu Yamane, and Benjamin Kuipers for their valuable insights and feedback during the early stages of this project.